With the recent advancements in machine learning and natural language processing, large language models (LLMs) have gained significant attention and popularity. These models are powerful tools that can generate human-like text, and their applications range from content creation to sentiment analysis. In this article, we’ll explore the basics of large language models, their working principles, popular examples, and their applications.

What are Large Language Models?

A language model is a statistical model designed to predict the probability of a sequence of words. Large language models are a type of artificial neural network trained on vast amounts of text data. These models have a massive number of parameters, enabling them to learn complex patterns in language. By leveraging this training, they can generate text that is coherent, grammatically correct, and contextually appropriate.
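
To make “the probability of a sequence of words” concrete, here is a minimal sketch built on a toy count-based bigram model. The bigram model is only an assumption chosen for illustration: real LLMs replace the counts with a neural network and condition on much longer contexts. The idea it shows is the chain rule, which writes a sentence's probability as a product of next-word probabilities, P(w1, ..., wn) = P(w1) × P(w2 | w1) × ... × P(wn | w1, ..., wn-1). A bigram model approximates each factor using only the previous word. The function names are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigram(sentences):
    """Count word pairs in a toy corpus; '<s>' marks the start of a sentence."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = ["<s>"] + sentence.split()
        for prev, curr in zip(words, words[1:]):
            counts[prev][curr] += 1
    return counts

def sequence_probability(counts, sentence):
    """Chain rule with a bigram approximation: multiply P(word | previous word)."""
    words = ["<s>"] + sentence.split()
    prob = 1.0
    for prev, curr in zip(words, words[1:]):
        total = sum(counts[prev].values())
        if total == 0:
            return 0.0  # unseen context: this toy model has no smoothing
        prob *= counts[prev][curr] / total
    return prob

corpus = ["the cat sat", "the cat ran", "the dog sat"]
model = train_bigram(corpus)
print(sequence_probability(model, "the cat sat"))  # 1 * 2/3 * 1/2, about 0.333
print(sequence_probability(model, "the dog ran"))  # 0.0: "dog ran" never occurs
```

The zero for “the dog ran” shows the weakness of pure counting: any unseen word pair makes the whole sentence impossible, which is one reason practical models use smoothing or, in the case of LLMs, learned representations that generalize.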

Beyond generating human-like text, LLMs can translate between languages and answer questions based on provided context. This versatility makes them valuable across a wide range of natural language processing (NLP) tasks.

How Do Large Language Models Work?

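Large language models are pre-trained with self-supervised learning, often loosely called unsupervised learning: no human-written labels are needed, because the training targets come from the text itself (for example, the next word in a sentence). By predicting these targets across a large dataset, the model learns patterns in the data and then uses them to generate new, similar text.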
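
The key point is that the targets are derived from the raw text, so no labeling effort is required. The minimal sketch below turns one unlabeled sentence into several (context, next word) training examples. It assumes a whitespace tokenizer and a fixed context window of three words, both simplifications; `make_training_pairs` is a hypothetical helper.

```python
def make_training_pairs(text, context_size=3):
    """Turn raw text into (context, next word) pairs.

    No human labels are needed: each target is simply the word that
    follows its context in the original text.
    """
    words = text.split()  # toy whitespace tokenizer; real LLMs use subword tokenizers
    pairs = []
    for i in range(1, len(words)):
        context = words[max(0, i - context_size):i]  # up to context_size preceding words
        pairs.append((context, words[i]))
    return pairs

for context, target in make_training_pairs("language models learn patterns from text"):
    print(context, "->", target)
```

A six-word sentence yields five examples, and a corpus of billions of words yields billions of them, which is what makes training at this scale possible.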

Training and Inference

- Data Preparation: A large corpus of text is collected, cleaned, and tokenized, that is, split into words or subword units the model can process.

- Model Training: The model adjusts its parameters to minimize a loss, typically cross-entropy, that measures how poorly its predicted word probabilities match the actual words in the training corpus.

- Inference: Given a starting sequence (a prompt), the trained model predicts the next word, appends it to the sequence, and repeats, building coherent output one word at a time.
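
The three steps can be sketched end to end with a toy bigram model. This is an assumption for illustration only: an LLM is a neural network trained by gradient descent, whereas here the "parameters" are word-pair frequencies, because counting happens to give the maximum-likelihood (lowest cross-entropy) solution for a bigram model. `cross_entropy` computes the kind of training loss described in the Model Training step, and `generate_greedy` performs inference by repeatedly appending the single most likely next word. All names are hypothetical, and greedy decoding is only one choice; practical systems often sample from the predicted distribution instead.

```python
import math
from collections import Counter, defaultdict

def fit_bigram(sentences):
    """Data preparation + training: tokenize, count pairs, normalize to P(next | previous).

    For a bigram model, these relative frequencies are the maximum-likelihood
    estimate, i.e. they minimize cross-entropy on the training corpus.
    """
    counts = defaultdict(Counter)
    for s in sentences:
        words = ["<s>"] + s.split() + ["</s>"]  # sentence start and end markers
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    probs = {}
    for prev, nexts in counts.items():
        total = sum(nexts.values())
        probs[prev] = {w: c / total for w, c in nexts.items()}
    return probs

def cross_entropy(probs, sentence):
    """Average negative log-probability of each actual next word (lower is better).

    Assumes every word pair in `sentence` was seen in training (no smoothing).
    """
    words = ["<s>"] + sentence.split() + ["</s>"]
    losses = [-math.log(probs[prev][nxt]) for prev, nxt in zip(words, words[1:])]
    return sum(losses) / len(losses)

def generate_greedy(probs, max_words=10):
    """Inference: repeatedly append the most likely next word until '</s>'."""
    output, prev = [], "<s>"
    for _ in range(max_words):
        nxt = max(probs[prev], key=probs[prev].get)
        if nxt == "</s>":
            break
        output.append(nxt)
        prev = nxt
    return " ".join(output)

corpus = ["the cat sat", "the cat ate", "the dog sat", "a cat sat"]
probs = fit_bigram(corpus)
print(cross_entropy(probs, "the cat sat"))
print(generate_greedy(probs))
```

With this corpus, greedy decoding produces “the cat sat”. That sentence has probability 3/4 × 2/3 × 2/3 × 1 = 1/3, so its average loss is ln(3)/4, about 0.27.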

Key Architectures in LLMs

Several neural network architectures contribute to LLM development, including:

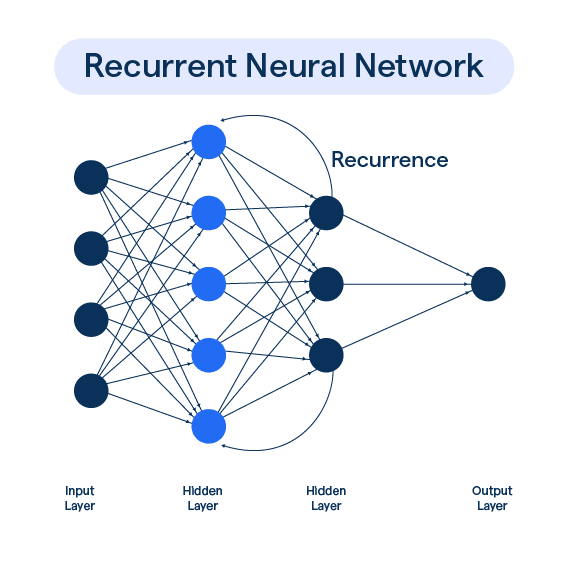

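- Recurrent Neural Networks (RNNs): These process a sequence one element at a time, carrying a hidden state forward, but struggle with long-term dependencies because the training signal (gradient) tends to vanish or explode over many steps.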

- Long Short-Term Memory (LSTM) Networks: A type of RNN that uses gates to control what its memory cell keeps, updates, or discards, which helps it retain information over longer sequences.

- Transformers: Introduced in the 2017 paper “Attention Is All You Need”, this architecture revolutionized NLP with self-attention, which lets every position in a sequence attend to every other position directly and in parallel instead of step by step. It forms the foundation for many modern LLMs.
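
Self-attention is the core operation of the Transformer. Below is a minimal NumPy sketch of single-head scaled dot-product attention, softmax(Q Kᵀ / √d_k) V, the formula defined in “Attention Is All You Need”. Assumptions: random matrices stand in for learned weights and token embeddings, and masking, multiple heads, and the rest of the Transformer block are omitted. It illustrates why Transformers parallelize well: the scores for all pairs of positions come from a single matrix product.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention.

    X has shape (seq_len, d_model). Every position attends to every other
    position in one matrix product, rather than step by step as in an RNN.
    """
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)     # (seq_len, seq_len) pairwise similarity scores
    weights = softmax(scores, axis=-1)  # each row is a distribution summing to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
seq_len, d_model, d_k = 4, 8, 8
X = rng.normal(size=(seq_len, d_model))  # stand-in for 4 token embeddings
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))  # stand-in weights
output, weights = self_attention(X, W_q, W_k, W_v)
print(output.shape, weights.shape)  # (4, 8) (4, 4)
```

Each row of `weights` says how strongly one position draws on every other position when building its output vector.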

Popular Examples of Large Language Models

GPT-3

GPT-3 (Generative Pre-trained Transformer 3) by OpenAI boasts 175 billion parameters. It excels at generating human-like text, translating between languages, and answering questions based on context.

BERT

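BERT (Bidirectional Encoder Representations from Transformers) by Google has 340 million parameters in its larger version, BERT-Large. It reads text in both directions to understand the context of each word in a sentence, and it is used mainly for understanding tasks such as classification and question answering rather than for generating text.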
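
BERT’s bidirectional view of context comes from its masked-language-modeling pre-training: some input words are hidden, and the model learns to predict them from the words on both sides. The sketch below only builds one such training example. It simplifies the original recipe, which selects about 15% of tokens but does not always replace them with `[MASK]`; here every selected word becomes `[MASK]`, and `mask_tokens` is a hypothetical helper.

```python
import random

def mask_tokens(words, mask_rate=0.15, seed=None):
    """Build a simplified masked-language-modeling example.

    Returns the masked input and a dict mapping each masked position to the
    original word the model would have to predict from context on both sides.
    """
    rng = random.Random(seed)
    n_mask = max(1, round(len(words) * mask_rate))  # always mask at least one word
    positions = sorted(rng.sample(range(len(words)), n_mask))
    masked = list(words)
    targets = {}
    for i in positions:
        targets[i] = masked[i]  # remember the hidden word as the training target
        masked[i] = "[MASK]"
    return masked, targets

sentence = "she sat on the river bank and watched the boats".split()
masked, targets = mask_tokens(sentence, seed=42)
print(" ".join(masked))
print(targets)
```

Whichever word is hidden, the model can use words on both its left and its right to recover it, whereas a left-to-right generator such as GPT-3 only conditions on the words to the left.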

T5

T5 (Text-to-Text Transfer Transformer), also by Google, has 11 billion parameters in its largest version. It frames every NLP task, including text classification, generation, and translation, as converting input text into output text.
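
Parameter counts translate directly into hardware requirements. Here is a back-of-the-envelope sketch using the counts above, assuming 4 bytes per parameter for 32-bit floats and 2 bytes for 16-bit floats, and counting only the stored weights (activations, optimizer state, and other overhead are ignored). `weight_memory_gb` is a hypothetical helper.

```python
# Parameter counts from the examples above (340 million is BERT-Large).
MODELS = {"GPT-3": 175e9, "BERT": 340e6, "T5": 11e9}

# Assumed storage size per parameter; real deployments may use other formats.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2}

def weight_memory_gb(n_params, bytes_per_param):
    """Memory to hold the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bytes_per_param / 1e9

for name, n_params in MODELS.items():
    sizes = ", ".join(f"{fmt}: {weight_memory_gb(n_params, b):,.2f} GB"
                      for fmt, b in BYTES_PER_PARAM.items())
    print(f"{name}: {sizes}")
```

Under these assumptions, GPT-3’s weights alone come to 700 GB in 32-bit and 350 GB in 16-bit precision, while BERT-Large needs about 1.36 GB even in 32-bit precision.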

Applications of Large Language Models

LLMs are applied across numerous fields due to their ability to understand and generate language-based data. Some notable applications include:

- Language Translation: Services such as Google Translate use transformer-based language models to produce fluent translations.

- Question Answering: Models like BERT can be fine-tuned to find the answer to a question within a given passage.

- Text Summarization: LLMs can condense lengthy documents into concise summaries.

- Content Creation: They assist in generating marketing and advertising copy.

- Sentiment Analysis: LLMs analyze text to determine emotional tone, such as positive or negative sentiment.

Advancements in Large Language Models

The development of large language models has been driven by continuous research and technological advancements. The introduction of the transformer architecture marked a significant milestone: because self-attention processes all positions of a sequence in parallel, transformers can be trained efficiently on modern hardware, which made models with billions of parameters practical. These advancements have improved LLM capabilities, including more coherent text and better contextual understanding.

Limitations of Large Language Models

Despite their impressive capabilities, LLMs have limitations:

- Bias: Since they are trained on large text datasets, any biases in the data can manifest in the generated text.

- Lack of True Understanding: While they can generate contextually accurate text, they do not possess true comprehension or reasoning capabilities.

Conclusion

Large language models represent a significant leap forward in the field of artificial intelligence and natural language processing. With their ability to generate human-like text and power various NLP applications, they are transforming industries and redefining how we interact with technology. However, as these models evolve, addressing their limitations will be crucial for ethical and effective deployment.

Want to read more interesting blogs like this? Visit https://www.codersbrain.com/blog/